preparation for migrating data to Oracle Virtual Private Database

Introduction

Recently I was part of a team involved in the preparation of migration data belonging to multiple business units into a single Oracle 19c database with Virtual Private Database (VPD). The VPD solution is used for the virtual separation of the data per business unit.

In this article I will be describing some of the issues that we encountered when preparing for the migration of ‘similar’ business data into one target Oracle database that has Virtual Private Database enabled. Possible solutions for these issues are described as well. Similar in this case means that the source databases contain identical schemas and only differ in the data that is stored in the various tables. Each single database contains the data of one specific business unit.

After migration, all the data retrieved from the source databases will be housed in one target database. The data will be virtually separated per business unit, which is done by means of the Oracle Virtual Private Database functionality.

The aim for this migration is a huge cost saver for the business. For reasons, think of the decrease in Oracle license costs, but also the less effort needed from the support department because they will now only have to manage one database instead of multiple. Other gains will or can be that software only needs to be enrolled once. Instead of provisioning software to multiple separate databases that are in operation for the different business units, this only needs to be done once now. And so, the business units will be using the same (latest) software versions, unless for business reasons otherwise decided upon.

Quick links to the parts of this article:

Virtual Private Database

How this VPD example works

Example

Enabling the virtual private database mechanism

Loading the data of a business unit into the target VPD database

Cleanup after loading the data

Some of the issues to address

Data structures

Primary and foreign key constraint definitions

Sequences

Unique indexes

Function based indexes

Unique key constraints

Unique key constraints and indexes that are based on nullable columns

Case vs Decode

Columns that have the Long type

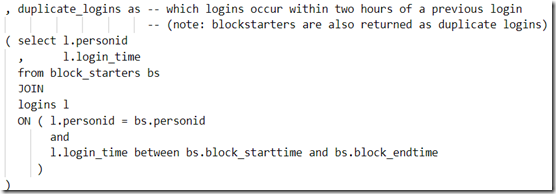

Loading the data

Virtual columns

Result and wrap up

Virtual Private Database

Oracle Virtual Private Database creates security policies to control database access at the row and column level. It was introduced with Oracle Database version 8i and is still available in current versions today (at the time of writing Oracle database 19c). A simple example is a VPD that might only restrict access to data during business hours. A more complex example concerns a VPD that accesses/reads an application context via a login trigger and enforce row level security against the different tables that are in the database schemas. The latter is what will be addressed in this article.

How this VPD example works

So, the data belonging to the different business units will be loaded into one VPD enabled database. Before loading the data, each table in this scenario will be enhanced once with an additional column that will be indicating the business unit that owns the data. This column will be named BUSINESS_UNIT_VPD_ID in the examples below.

A unique service will be defined once for each business unit that has its data loaded into the VPD enabled database. Connections to the database for a specific business unit is made via this dedicated service. This makes it possible to have a logon trigger fired upon each connection made, which sets a unique value bound for this business unit into the session context.

The value for the BUSINESS_UNIT_VPD_ID column (which is added once to the tables part of the VPD solution) will be determined automatically through logic set as a default column value. This logic retrieves the value for the business unit from the session context mentioned above.

Example

In this example there exists 3 services and each service is for a specific business unit:

· Service1 is for/with business unit 100;

· Service2 is for/with business unit 200;

· Service3 is for/with business unit 300.

Suppose the VPD enabled database contains a table called EXAMPLE and contains the following data in it (as seen by the SYS user, who sees all data rows).

|

VPD_EXAMPLE

|

|

|

SOME_COLUMN

|

BUSINESS_UNIT_VPD_ID

|

|

Foo

|

100

|

|

Bar

|

200

|

|

Baz

|

300

|

Now, if a connection is made to Service1 with a user other than SYS, only the FOO data will be listed as a query result. The same applies for the other two services which will only list the Bar data and Baz data respectively.

Enabling the virtual private database mechanism

To enable the VPD mechanism on a database, a user that has the permissions to do so is needed. In the example below a user with the name VPD_ADMIN will be created and used for this.

For the creation of the VPD_ADMIN user connect to the target database as SYS AS SYSDBA.

CONN / AS SYSDBA

DEFINE vpdadminpw = 'SomeHardToGuessSecret'

Create a user that will be the admin for the VPD.

CREATE USER vpd_admin IDENTIFIED BY &vpdadminpw ACCOUNT UNLOCK

QUOTA UNLIMITED ON users

DEFAULT TABLESPACE users;

--

GRANT CONNECT, RESOURCE TO vpd_admin;

Grant the user the appropriate rights for creating VPD objects.

-- requirements for creating vpd objects

GRANT CREATE ANY CONTEXT, ADMINISTER DATABASE TRIGGER TO vpd_admin;

GRANT EXECUTE ON DBMS_SESSION TO vpd_admin;

GRANT EXECUTE ON DBMS_RLS TO vpd_admin;

-- requirements for managing services

GRANT EXECUTE ON dbms_service TO vpd_admin;

GRANT READ ON dba_services TO vpd_admin;

GRANT READ ON v_$active_services TO vpd_admin;

Now switch your connection to the vpd_admin user you just created.

CONNECT vpd_admin/&VPDADMINPW

Create a table for keeping track of the connections to the VPD enabled database.

CREATE TABLE connection_log (

username varchar2(32)

, os_user varchar2(32)

, remote_ip varchar2(16)

, service varchar2(32)

, found_business_unit_service_name varchar2(20)

, found_business_unit_vpd_id number(6)

, reason varchar2(80)

, log_datetime date;

Also create a table that binds service names to the VPD identifiers.

CREATE TABLE business_units (

business_unit_service_name varchar2(20)

, business_unit_vpd_id number(6)

, primary key(business_unit_service_name, business_unit_vpd_id)

);

With the business_units table in place, now add the service names and bounded business unit identifiers into it.

INSERT INTO vpd_admin.business_units (business_unit_service_name, business_unit_vpd_id) values ('Service1', 100);

INSERT INTO vpd_admin.business_units (business_unit_service_name, business_unit_vpd_id) values ('Service2', 200);

INSERT INTO vpd_admin.business_units (business_unit_service_name, business_unit_vpd_id) values ('Service3', 300);

COMMIT;

Then create and start the services, so these can be used to make connections with them.

EXEC DBMS_SERVICE.CREATE_SERVICE('Service1', 'Service1');

EXEC DBMS_SERVICE.CREATE_SERVICE('Service2', 'Service2');

EXEC DBMS_SERVICE.CREATE_SERVICE('Service3', 'Service3');

EXEC DBMS_SERVICE.START_SERVICE('Service1');

EXEC DBMS_SERVICE.START_SERVICE('Service2');

EXEC DBMS_SERVICE.START_SERVICE('Service3');

Also create the following function, which will be called from a policy that will be attached to each table that will be managed by the VPD mechanism.

CREATE OR REPLACE FUNCTION business_unit_vpd_policy_by_id

(p_schema IN VARCHAR2, p_table IN VARCHAR2)

RETURN VARCHAR2

AS

BEGIN

RETURN 'business_unit_vpd_id = SYS_CONTEXT(''business_unit_ctx'', ''business_unit_vpd_id'')';

END;

Attach a policy to each table that must have the VPD mechanism attached to it (so replace schema_name and table_name with actual values).

DBMS_RLS.ADD_POLICY(

object_schema => 'schema_name',

object_name => 'table_name',

policy_name => 'business_unit_vpd',

function_schema => 'vpd_admin',

policy_function => 'business_unit_vpd_policy_by_id',

policy_type => DBMS_RLS.CONTEXT_SENSITIVE,

namespace => 'business_unit_ctx',

attribute => 'business_unit_vpd_id'

);

Extend each of the tables that have the mentioned policy attached with an additional column BUSINESS_UNIT_VPD_ID, which may be hidden, and have it assigned the value of the business unit from the system context as a default.

ALTER TABLE table_owner.table_name

ADD (business_unit_vpd_id NUMBER INVISIBLE

DEFAULT sys_context('business_unit_ctx','business_unit_vpd_id')

NOT NULL

);

Loading the data of a business unit into the target VPD database

For loading the business units data into the target VPD database this had to be exported first. In the project that I was part of, the schemas that contained the data to be migrated were exported with the EXPDB utility. I will not go into further details on this utility, it is well documented on the internet.

The exported data of a business unit was then imported with the IMPDP utility with the names of the schemas altered (schema names were prefixed with a label (e.g., STG_). By doing so, the schemas don’t interfere with the target schemas that have the name originally. The renaming of the schema names during import was done by the REMAP_SCHEMA option/parameter of the IMPDP utility. Again, see the internet for further details on the usage.

Then custom made scripts were run to generate the statements to be used for loading the data into the target schemas from the ‘prefixed’ source schemas. The generated statement consists of the following:

- statements to disable all the active constraints on the to be loaded tables

- INSERT INTO .. AS SELECT FROM statements that queries the data from the source schemas (the ones that were ‘prefixed’ through the REMAP_SCHEMA option) and insert it into the target schema’s (the ones that have been adjusted with the VPD mechanism)

- statements to re-enable all the active constraints on the to be loaded tables

After making connection for the business unit user that must have its data loaded into the VPD enabled target database, the generated statements are executed. This connection is necessary because it sets the right value for the BUSINESS_UNIT_VPD_ID into the session context. As explained before this value is then used to set the value of this column in the tables.

Cleanup after loading the data

After the data for a business unit has been loaded successfully into the VPD enabled target schemas, the ‘prefixed’ schemas can be removed from the database.

Some of the issues to address

With the mentioned definitions above in place, the Virtual Private Database mechanism is enabled. But to be able to load the data of the different business units into the VPD enabled tables some issues must still be addressed first. These issues are what is looked into next.

Data structure differences

One of the most difficult things to address is solving the differences in the data structures for the business units of which the data is to be migrated. In the project I was part of, the data structures that were in scope appeared to be identical, making this part a relatively easy one.

Quite an amount of analysis will be needed though to find out the differences that may exist between the data sources of the business units. When differences are found, a strategy to cope with these will be needed. Think for starters in bringing the structures of the business units to the same version first if this is possible, and then see if differences still exist. Because applications may depend on these data structures and specific versions this may be a huge project in itself.

Primary and foreign key constraint definitions

Loading the data of the different business units into a single VPD enabled database can result in duplicate data errors, because of failing constraint definitions. To overcome this issue, the primary key constraint and unique constraints defined upon the tables must be enhanced with the business unit identifier (the BUSINESS_UNIT_VPD_ID column, which was added to the tables in the last step of the previous section). In this section the focus is on the handling of primary key constraints. The handling of unique constraints is described later on.

As the definition of the primary keys are enhanced with the business unit identifier (the BUSINESS_UNIT_VPD_ID column), consequently also the foreign key constraints that are referencing these primary keys need to be extended.

To query all the primary keys constraints for the tables that have been altered previously, and thus now contain the BUSINESS_UNIT_VPD_ID column, the following query can be used:

SELECT cns.owner AS owner

, cns.table_name AS table_name

, cns.constraint_name AS constraint_name

, LISTAGG( ccs.column_name, ', ') within group (order by ccs.position) AS columns_in_pk

FROM dba_constraints cns

, dba_cons_columns ccs

, dba_tab_columns dtc

WHERE cns.constraint_name = ccs.constraint_name

AND cns.owner= ccs.owner

AND cns.table_name = ccs.table_name

AND cns.owner = dtc.owner

AND cns.table_name = dtc.table_name

AND dtc.column_name = 'BUSINESS_UNIT_VPD_ID'

AND cns.owner = :b_owner

AND cns.constraint_type = 'P'

GROUP BY cns.owner,

, cns.table_name

, cns.constraint_name;

To be able to extend a primary key constraint with an additional column (BUSINESS_UNIT_VPD_ID), the foreign key constraints that reference it must be dropped first. After extending the primary key, the foreign key constraints can be recreated from its current definition with the additional column added.

The next query can be used to find out the details that can/must be used for recreating the foreign key constraints that reference the primary key constraint which is passed in as a parameter:

SELECT cns.r_owner AS fkey_table_owner

, cns.table_name AS fkey_table_name

, cns.constraint_name AS fkey_constraint_name

, cns.delete_rule AS delete_rule

, LISTAGG( distinct ccs.column_name, ', ') WITHIN GROUP (order by ccs.position) AS columns_in_fkey

, ccs_pk.owner AS pkey_table_owner

, ccs_pk.table_name AS pkey_table_name

, ccs_pk.constraint_name AS pkey_constraint_name

, LISTAGG( distinct ccs_pk.column_name, ', ') within group (order by ccs_pk.position) columns_in_pkey

FROM dba_constraints cns

, dba_cons_columns ccs

, dba_cons_columns ccs_pk

WHERE cns.r_constraint_name = :b_pkey_constraint_name

AND cns.r_owner= :b_pkey_owner

AND cns.constraint_type = 'R'

AND cns.constraint_name = ccs.constraint_name

AND ccs.owner = cns.owner

AND ccs.table_name = cns.table_name

AND ccs_pk.constraint_name = cns.r_constraint_name

AND ccs_pk.owner = cns.owner

GROUP BY cns.r_owner

, cns.table_name

, cns.constraint_name

, cns.delete_rule

, ccs_pk.owner

, ccs_pk.table_name

, ccs_pk.constraint_name;

Now the details for recreating the foreign keys to a primary key are known, these objects can be dropped and recreated.

-- drop the foreign key constraints that reference the primary key

-- (note that this can be statements for multiple objects)

ALTER TABLE fkey_owner.fkey_table_name

DROP CONSTRAINT fkey_constraint_name;

-- then, drop the primary key

ALTER TABLE pkey_table_owner.pkey_table_name

DROP CONSTRAINT pkey_constraint_name;

-- recreate the primary key (including the BUSINESS_UNIT_VPD_ID)

ALTER TABLE pkey_table_owner.owner.pkey_table_name

ADD CONSTRAINT pkey_constraint_name

PRIMARY KEY (columns_in_pkey, BUSINESS_UNIT_VPD_ID);

-- recreate the foreign key constraints that were dropped above (including the BUSINESS_UNIT_VPD_ID).

-- If the column ‘delete_rule’ has the value ‘CASCADE’ than add the ‘ON DELETE CASCADE’ to the statement.

ALTER TABLE fkey_table_owner.fkey_table_name

ADD CONSTRAINT fkey_constraint_name

FOREIGN KEY (columns_in_fkey, BUSINESS_UNIT_VPD_ID)

REFERENCES pkey_table_owner.pkey_table_name(columns_in_pkey, BUSINESS_UNIT_VPD_ID)

[' ON DELETE CASCADE']

The optional ‘ ON DELETE CASCADE’ clause should be added only if the queried attribute ‘delete rule’ contains the value ‘CASCADE’.

Sequences

While loading the data of the different business units into a single target, the highest value that is registered in the sequences and the corresponding values in the table columns are likely to go out of sync.

To overcome this issue, the highest value registered on a sequence in the source location (the ‘prefixed ’ schema mentioned earlier) can/should be compared to the value of the same sequence that is in the target location. If the value of the latter is lower than the one in the source location than it should be set to the higher value. This makes sure that, when a sequence is used to set the value of a column, it will get a value that was not given out before.

The below query checks the values of sequences that are in the schema to be loaded and the same sequence in the target schema and determines the value that must be set in the target.

SELECT dss.sequence_owner AS sequence_owner

, dss.sequence_name AS sequence_name

, dss_stg.last_number + 1 AS new_number

FROM dba_sequences dss

, dba_sequences dss_stg

WHERE dss.sequence_owner = :b_target_owner

AND dss_stg.sequence_owner = :b_source_owner

AND dss_stg.sequence_owner = dss.sequence_owner

AND dss.sequence_name = dss_stg.sequence_name

AND dss.last_number < dss_stg.last_number;

Unique indexes

In the database there may exist unique indexes that are not bound to a constraint but are defined on a table that will be managed by the VPD mechanism. To make certain these indexes remain unique and will not cause duplicate data errors, these also should be recreated with the column BUSINESS_UNIT_VPD_ID added to its definition.

The following query searches for the indexes on a per schema basis:

SELECT dis.owner AS index_owner

, dis.index_name AS index_name

, dis.table_name AS table_name

, dis.table_owner AS table_owner

FROM dba_indexes dis

, dba_tab_columns dtc

WHERE dis.owner = :b_owner

AND dis.table_name = nvl(:b_table_name, dis.table_name)

AND dis.constraint_index = 'NO'

AND dis.uniqueness = 'UNIQUE'

AND dis.index_type = 'NORMAL'

AND dtc.owner = dis.owner

AND dtc.table_name = dis.table_name

AND dtc.column_name = 'BUSINESS_UNIT_VPD_ID';

For the found index names the current definition must be queried so these can be recreated after being dropped

SELECT aic.index_owner

, aic.index_name

, aic.table_owner

, aic.table_name

, aic.column_name

, aic.column_position

, aic.descend

, ai.uniqueness

FROM all_indexes ai

, all_ind_columns aic

WHERE ai.owner = :b_index_owner

AND ai.index_name = :b_index_name

AND aic.table_owner = ai.table_owner

AND aic.index_name = ai.index_name

AND aic.index_owner = ai.owner;

For recreation of the index, also find out if all of its columns are nullable or not (the BUSINESS_UNIT_VPD_ID column excluded from this)

SELECT atc.column_name AS column_name

, atc.nullable AS nullable

FROM all_tab_columns atc

, dba_ind_columns dic

WHERE dic.index_owner = :b_index_owner

AND dic.index_name = :b_index_name

AND atc.table_name = dic.table_name

AND atc.owner = dic.table_owner

AND atc.column_name = dic.column_name;

Now the index can be dropped and then recreated

DROP INDEX owner.index_name;

If the index is not based on columns that are all nullable (the BUSINESS_UNIT_VPD_ID excluded) the index can be recreated as follows:

CREATE UNIQUE INDEX index_owner.index_name

ON table_owner.table_name (column_name…, ‘BUSINESS_UNIT_VPD_ID’);

See the section ‘unique key constraints and indexes that are based on nullable columns’ for the situation where all columns are nullable, because these needs to be recreated slightly different.

Function based indexes

Function based indexes are indexes that store the outcome value of a defined operation on a column(s) and was first introduced in Oracle 8i. Especially those that are marked as being unique must be taken care of when migrating the data of multiple business units into one database.

An example of the DDL for such a function based index is:

CREATE UNIQUE INDEX some_unique_column_idx

ON some_table(UPPER(some_table_column_name));

The following query selects the function based indexes that are defined on the tables that have been enhanced with the BUSINESS_UNIT_VPD_ID column (if not familiar, see the ‘primary and unique constraint definitions’ step for details on this column)

SELECT dis.table_owner AS table_owner

, dis.table_name AS table_name

, dis.owner AS index_owner

, dis.index_name AS index_name

, dis.uniqueness AS uniqueness

, dis.index_type AS index_type

, LISTAGG(dic.column_name||' '||dic.descend, ', ') WITHIN GROUP (order by dic.column_position) AS columns_in_index

FROM dba_indexes dis

, dba_ind_columns dic

, dba_tab_columns dtc

WHERE dis.owner = nvl(:b_owner, dis.owner)

AND dis.index_type like 'FUNCTION%'

AND dis.owner = nvl(:b_owner, dis.owner)

AND dic.index_owner = dis.owner

AND dic.index_name = dis.index_name

AND dtc.owner = dis.table_owner

AND dtc.table_name = dis.table_name

AND dtc.column_name = 'BUSINESS_UNIT_VPD_ID'

GROUP BY dis.table_owner

, dis.table_name

, dis.owner

, dis.index_name

, dis.uniqueness

, dis.index_type;

In the migration project that I was part of only index types with a value of ‘FUNCTION-BASED NORMAL’ were present, and therefore this solution only handles these. If other function based index types than ‘FUNCTION-BASED NORMAL’ are encountered, the right solution for these will have to be decided upon.

The value in the column named columns_in_index will contain something like the following and is not usable when recreating the function based index:

SOME_COLUMN_NAME ASC, SYS_NC00009$ ASC, OTHER_COLUMN_NAME ASC

To find out what the definition is that is bound to this SYS_ value the following query can be run, passing in the values for index_owner and index_name and the position of the SYS_ value in the index (which is 2 in the example).

SELECT die.column_expression AS column_expression

FROM dba_ind_expressions die

WHERE die.index_owner = :b_index_owner

AND die.index_name = :b_index_name

AND die.column_position = :b_column_position;

The resulting answer will show the definition of the function, eg. UPPER(unique_column_name).

From the above query results now drop and recreate the indexes:

DROP INDEX index_owner.index_name;

Just as with the creation of unique indexes in the former section, find out if the index is created for columns that are nullable (the BUSINESS_UNIT_VPD_ID excluded):

SELECT atc.column_name AS column_name

, atc.nullable AS nullable

FROM all_tab_columns atc

, dba_ind_columns dic

WHERE dic.index_owner = :b_index_owner

AND dic.index_name = :b_index_name

AND atc.table_name = dic.table_name

AND atc.owner = dic.table_owner

AND atc.column_name = dic.column_name;

If not all columns are nullable than the index can be recreated as follows:

CREATE [NONUNIQUE, UNIQUE] INDEX index_owner.index_name

ON table_owner.table_name (columns_in_index, ‘BUSINESS_UNIT_VPD_ID’);

See the section ‘unique key constraints and indexes that are based on nullable columns’ for the situation where all columns are nullable, because these needs to be recreated slightly different.

The value [NONUNIQUE, UNIQUE] can be determined/set to the value that is in the column named uniqueness.

Unique key constraints

Very likely, loading data from multiple business units into one target database (the one with the VPD mechanism enabled) will cause duplication errors when the defined unique key constraints, and also the foreign key constraints referencing these, are not acted upon appropriately.

The unique key constraints in this case must be enhanced with the BUSINESS_UNIT_VPD_ID attribute, that was added to the table definitions for VPD enablement. This is also the case for the foreign key constraints that reference these unique key constraints.

The next query finds the unique key constraint definitions for the tables that have been enhanced with the BUSINESS_UNIT_VPD_ID attribute. The constraints that do not have this attribute in the ‘colums_in_uk’ value must be dropped and recreated with the BUSINESS_UNIT_VPD_ID added to its definition.

SELECT cns.table_name AS table_name

, cns.constraint_name AS constraint_name

, LISTAGG( ccs.column_name, ', ') WITHIN GROUP (order by ccs.position) AS columns_in_uk

FROM dba_constraints cns

, dba_cons_columns ccs

, dba_tab_columns dtc

WHERE cns.constraint_name = ccs.constraint_name

AND cns.owner= ccs.owner

AND cns.table_name = ccs.table_name

AND cns.owner = dtc.owner

AND cns.table_name = dtc.table_name

AND dtc.column_name = 'BUSINESS_UNIT_VPD_ID'

AND cns.owner = :b_owner

AND cns.constraint_type = 'U'

GROUP BY cns.table_name

, cns.constraint_name

ORDER BY cns.table_name

, cns.constraint_name;

To find the foreign key constraints that references a unique constraint from the above result, the following query can be used:

SELECT cns.owner AS owner

, cns.constraint_name AS constraint_name

, cns.table_name AS table_name

, cns.r_owner AS r_owner

, cns.r_constraint_name AS r_constraint_name

, cns.status AS status

, cns.delete_rule AS delete_rule

, ccs_r.table_name AS r_table_name

, LISTAGG( distinct ccs.column_name, ', ') WITHIN GROUP (order by ccs.position) AS columns_in_fkey

, LISTAGG( distinct ccs_r.column_name, ', ') WITHIN GROUP (order by ccs_r.position) AS columns_in_ukey

FROM dba_constraints cns

, dba_cons_columns ccs

, dba_cons_columns ccs_r

WHERE cns.owner = :b_owner

AND cns.r_constraint_name = :b_r_constraint_name – the unique constraint name

AND cns.constraint_type = 'R'

AND cns.constraint_name = ccs.constraint_name

AND cns.owner= ccs.owner

AND cns.table_name = ccs.table_name

AND cns.r_constraint_name = ccs_r.constraint_name

AND cns.r_owner= ccs_r.owner

GROUP BY cns.owner

, cns.constraint_name

, cns.table_name

, cns.r_owner

, cns.r_constraint_name

, cns.status

, cns.delete_rule

, ccs_r.table_name;

If references from foreign keys are found for a unique key constraint, then to be able to recreate these constraints with the BUSINESS_UNIT_VPD_ID as an additional column, the foreign key constraints must be dropped first and the unique key constraint last.

ALTER TABLE owner.table_name

DROP CONSTRAINT constraint_name;

Now first recreate the unique constraint

ALTER TABLE owner.table_name

ADD CONSTRAINT constraint_name

UNIQUE( columns_in_uk, BUSINESS_UNIT_VPD_ID);

If the unique constraint is based upon columns that are nullable, than this definition can/will cause issues as will be explained in the following section ‘Unique key constraints and indexes that are based on nullable columns’. In these cases the unique constraint can be replaced with a function based index, as explained in that section.

Then recreate the foreign key constraints

ALTER TABLE owner.table_name

ADD CONSTRAINT constraint_name

FOREIGN KEY (columns_in_fkey, BUSINESS_UNIT_VPD_ID)

REFERENCES (r_owner.r_table_name(columns_in_ukey, BUSINESS_UNIT_VPD_ID)

[' ON DELETE CASCADE']

The optional ‘ ON DELETE CASCADE’ option should be added only if the queried attribute ‘delete rule’ contains the value ‘CASCADE’.

Unique key constraints and indexes that are based on nullable columns.

A database may contain unique key constraints and indexes that are based on sole nullable columns. These definitions are not sound, but they may exist and will cause problems in the VPD enabled database when not dealt with properly.

So what is the matter with these definitions?

In the non VPD database setup there will be no constraint violation when 2 rows are added that have the values for these nullable columns set to null.

But in the VPD enabled database these definitions are enhanced with the business unit identifier (BUSINESS_UNIT_VPD_ID in the examples) that will get its value assigned from the context that was set at database logon (via the service dedicated to a specific business unit as explained earlier) and will not be the null value.

As a result, when 2 rows of data are inserted with the nullable columns holding the null value and the BUSINESS_UNIT_VPD_ID holding a non null value, a constraint error saying that duplicate data is not allowed will be encountered.

To deal with this problem the constraints and indexes involved can be rewritten to a function based index. In this index the column values can be tested if they are equal to null or not, and the outcome can be used to have the BUSINESS_UNIT_VPD_ID value included in the index or not.

Such a function based index definition looks like the following:

CREATE UNIQUE INDEX index_owner.index_name

ON table_owner.table_name (

"NULLABLE_COLUMN_1",

"NULLABLE_COLUMN_2",

CASE WHEN "NULLABLE_COLUMN_1" IS NULL AND "NULLABLE_COLUMN_2" IS NULL

THEN NULL ELSE "BUSINESS_UNIT_VPD_ID" END

)

Case vs Decode

Originally I used a decode statement to determine the value for the column BUSINESS_UNIT_VPD_ID in the index.

The syntax that used the decode was slightly more complex, as it depended on the datatypes of the columns that were in the test to determine if all of these contained the null value.

CREATE UNIQUE INDEX index_owner.index_name

ON table_owner.table_name(optional_numeric_column, optional_varchar_column,

DECODE(nvl(to_char(optional_numeric_column), '') || nvl(optional_varchar_column),’’) , '', null,

BUSINESS_UNIT_VPD_ID));

In the decode the values of the columns are tested if they contain null and if so are translated to ‘’. If the concatenation of these result in ‘’ as well then this means that all are equal to null, which results in a null value in the index, or the BUSINESS_UNIT_VPD_ID value otherwise.

Then a colleague of mine suggested to use the CASE statement instead of the DECODE.

After examining this possibility it became clear that the equivalent syntax writes/reads easier. Also because CASE is pure SQL it was chosen in favor of the DECODE variant.

DECODE can perform an equality check only, CASE can work with other logical operators as well, e.g., < ,> ,BETWEEN , LIKE.

CASE was introduced in Oracle 8.1.6 and is a sql statement, prior to that version only DECODE was available and is an Oracle function that only can be used in SQL.

Columns that have the Long type

Oracle has recommended to convert existing LONG columns to LOB (CLOB, NCLOB) columns a long time ago. Long columns are supported only for only backwards compatibility reason. As a result you may still encounter columns that have not been migrated yet and now is a good time to take care of this.

Long columns can contain up to 2 gigabytes of information. Lob columns can store up to 16 TBs, it holds a pointer to an index in the LOBINDEX segment, which points to a piece of data stored in the LOBSEGMENT segment. A lob is made up of one or more pieces of data.

The below query selects the tables that still have a column with the long datatype and generates an alter table statement for each. The statements can then be run to alter the column definitions in the target database (the one that has VPD enabled).

Columns that have the long data type are modified to clob (character large object) and columns with the long raw type data type are modified to blob (binary large object).

SELECT 'alter table ' ||

dt.owner || '.' ||

dt.table_name ||

' modify ( ' || dtc.column_name || ' ' ||

decode(dtc.data_type,'LONG','CLOB','LONG RAW','BLOB') ||

');'

FROM dba_tab_columns dtc JOIN

dba_tables dt ON (dt.owner = dtc.owner and dt.table_name = dtc.table_name) JOIN

vpd_admin.vpd_schemas vs on dt.owner = vs.vpd_schema

WHERE dtc.data_type like 'LONG%';

Now the target database no longer has tables with long (raw) as datatype.

Loading the data

As said, there exists a prerequisite for connecting as the business unit via its dedicated service before running the statements that will load the data for it into the VPD enabled target tables. It is essential because it will set the value for the business unit identifier (the BUSINESS_UNIT_VPD_ID) to its correct value.

Virtual columns

As told earlier, custom made scripts were used to load the data from the tables in the ‘prefixed’ schemas into the VPD enabled target tables.

While doing so, we encountered tables that hold virtual columns. Virtual columns contain/show values that are derived from other columns in the table and do not physically exist in the table.

As a consequence these columns must not be part of the INSERT INTO statements to be generated for loading the data into the VPD enabled target tables. So this makes generating the INSERT INTO statements a slight more complex because it will have to explicitly states the column names that are to be filled and queried, leaving the virtual column name out.

Thus instead of generating a statement like

INSERT INTO table_owner.table_name

SELECT * FROM stg_table_owner.table_name;

the alternative statement like

INSERT INTO table_owner.table_name (column1, column2, …)

SELECT column1, column2, … FROM stg_table_owner.table_name ;

must be generated.

For determining the virtual column this statement can be used:

SELECT dtc.column_name AS column_name

FROM dba_tab_cols dtc

WHERE dtc.owner = :b_owner

AND dtc.table_name = :b_table_name

AND dtc.virtual_column = 'YES';

Result and wrap up

After handling the issues encountered while preparing for the data migration, loading the data into one target Oracle 19c Virtual Private Database went quite smoothly. This is mainly because the source data structures were identical, and so the target data structure could remain the same. If this had not been the case, then a merged data structure would have been needed as a target. This causes that all the applications that are making use of it would likely have to be adjusted as well, increasing the effort needed to successfully migrate a lot.

Furthermore, I want to point out that the issues that were mentioned in this article are a just summarization. By no means are these meant as a complete list. So when you are planning on doing such a migration yourself, you will still have to do your own analysis and deal with the issues that occur. Of course, I hope you will be able to apply some of the solutions if you experience similar issues.

The post Preparation for migrating data to Oracle Virtual Private Database appeared first on AMIS, Data Driven Blog - Oracle & Microsoft Azure.

Step 1 invokes a function to add the toolbar to every page in the browser context, every time after the page has navigated or reloaded and the DOM has been rebuilt. This toolbar has controls to run a scenario (as well as to switch between scenarios, pause execution, reset a scenario). The toolbar is passed the director function; this function handles toolbar events regarding scenario execution.

Step 1 invokes a function to add the toolbar to every page in the browser context, every time after the page has navigated or reloaded and the DOM has been rebuilt. This toolbar has controls to run a scenario (as well as to switch between scenarios, pause execution, reset a scenario). The toolbar is passed the director function; this function handles toolbar events regarding scenario execution. The director() function is at the heart of things: it handles the toolbar events regarding the scenarios (play, reset, skip, pause,switch). These events are captured in the browser context and passed to the NodeJS context – from the onclick event handlers on the toolbar links. This statement creates the bridge from Browser back to NodeJS:

The director() function is at the heart of things: it handles the toolbar events regarding the scenarios (play, reset, skip, pause,switch). These events are captured in the browser context and passed to the NodeJS context – from the onclick event handlers on the toolbar links. This statement creates the bridge from Browser back to NodeJS: